Navigating the Expanding AI Universe: Deepening Our Understanding of MCP, A2A, and the Imperative of Non-Human Identity Security

By

Ben Kim

November 17, 2025

9-minute read

By

Ben Kim

November 17, 2025

9-minute read

.png)

The rapid advancements in Artificial Intelligence are not just theoretical anymore; they are manifesting in practical protocols like Anthropic’s Model Context Protocol (MCP) and Google’s Agent2Agent Protocol (A2A). These protocols are paving the way for a future where AI agents can interact seamlessly with external tools and collaborate directly with each other, promising unprecedented levels of automation and efficiency. However, this increased sophistication and interconnectedness inherently amplify security challenges, particularly concerning non-human identities (NHIs). In this expanded discussion, we will delve deeper into the intricacies of MCP and A2A, their potential security implications, and why securing NHIs is increasingly critical in this evolving landscape.

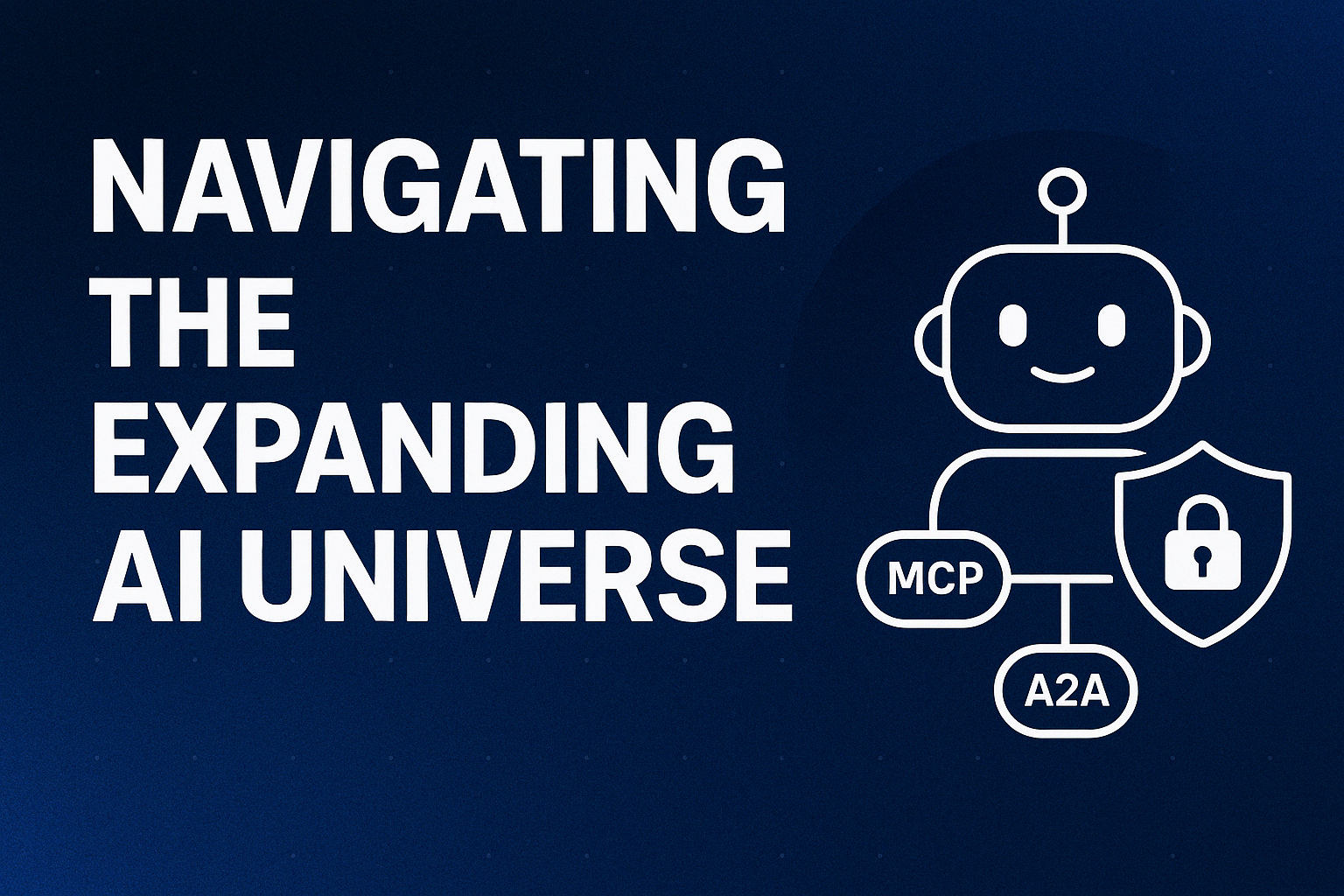

Anthropic's Model Context Protocol (MCP) serves as a crucial bridge, enabling Large Language Models (LLMs) and AI agents to securely connect with external resources such as APIs, databases, and file systems. Before the advent of standardized protocols like MCP, integrating external tools with AI often involved inefficient custom development for each tool. MCP offers a pluggable framework designed to streamline this process, thereby extending the capabilities of AI in a more standardized way. Since its introduction in late 2024, MCP has seen significant adoption in mainstream AI applications like Claude Desktop and Cursor, and various MCP Server marketplaces have emerged, indicating a growing ecosystem.

However, this rapid adoption has also brought new security vulnerabilities to light. The current MCP architecture typically involves three main components:

This multi-component interaction, especially in scenarios involving multiple instances and cross-component collaboration, introduces various security risks. Tool Poisoning Attacks (TPAs), as highlighted by Invariant Labs, are a significant concern. These attacks exploit the fact that malicious instructions can be embedded within tool descriptions, often hidden in MCP code comments. While these hidden instructions might not be visible to the user in simplified UIs, the AI model processes them. This can lead to the AI agent performing unauthorized actions, such as reading sensitive files or exfiltrating private data, as demonstrated in the scenario involving exfiltrating WhatsApp data via Cursor.

The underlying mechanism of a TPA is often straightforward. For instance, malicious MCP code could initially appear innocuous but later overwrite the tool's docstring with hidden instructions to redirect email recipients or access sensitive local files without the user's knowledge or consent.

Given these risks, several security considerations for MCP implementations are paramount:

To address these weaknesses, the MCP specification has been updated to include support for OAuth 2.1 authorization to secure client-server interactions with managed permissions. Key principles for security and trust & safety now emphasize user consent and control, data privacy, cautious handling of tool security (especially code execution), and user control over LLM sampling requests. Additionally, the community has suggested further enhancements like standardizing instruction syntax within tool descriptions, refining the permission model for granular control, and mandating or strongly recommending digital signatures for tool descriptions to ensure integrity and authenticity.

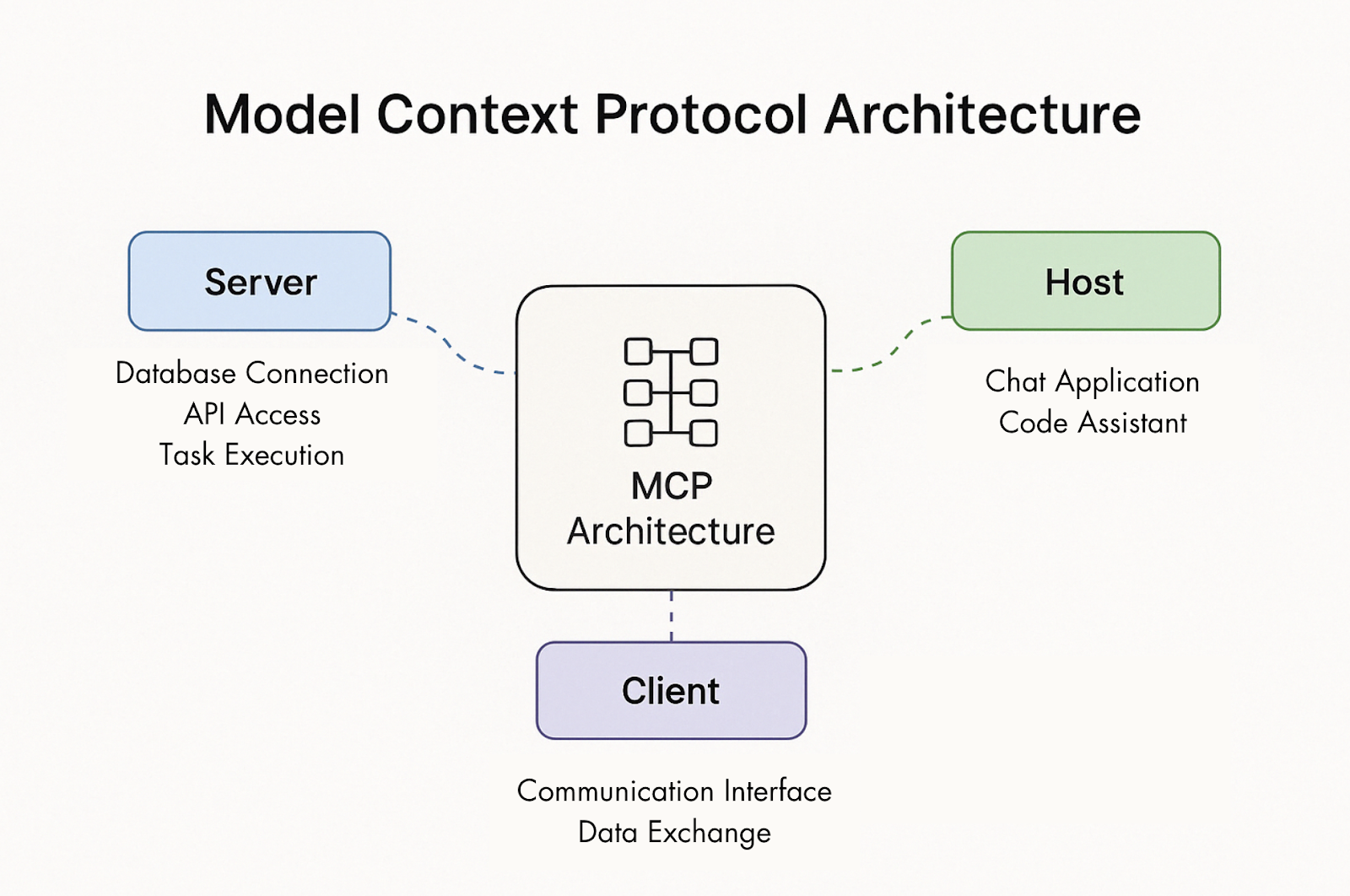

In contrast to MCP's focus on AI-to-tool communication, Google's Agent2Agent Protocol (A2A) is designed as an open standard specifically for AI agent interoperability, enabling direct communication and collaboration between intelligent agents. Google positions A2A as complementary to MCP, aiming to address the need for agents to work together to automate complex enterprise workflows and drive unprecedented levels of efficiency and innovation. This initiative reflects a shared vision of a future where AI agents, regardless of their underlying technologies, can seamlessly collaborate.

A2A is built upon five key design principles:

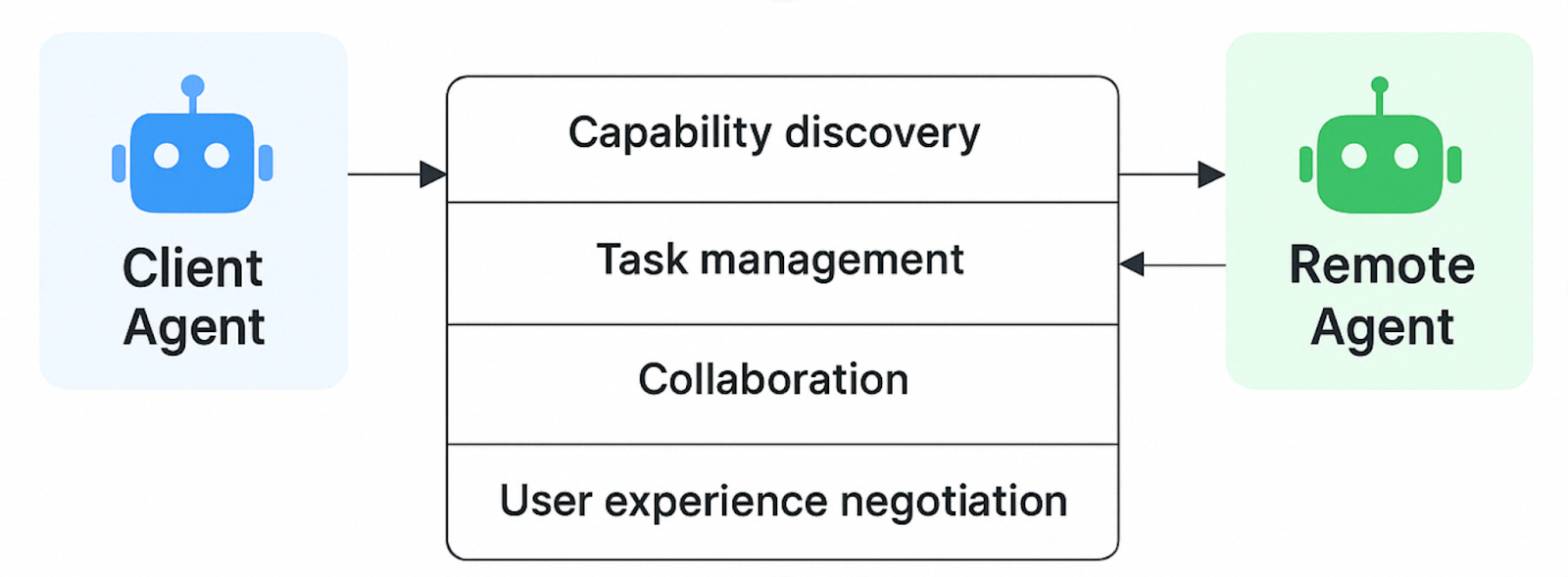

A2A facilitates communication between a "client" agent (formulating tasks) and a "remote" agent (acting on tasks). This involves several key capabilities:

A real-world example is candidate sourcing: a hiring manager's agent tasks sourcing and background check agents, all within a unified interface.

Google emphasizes a "secure-by-default" design for A2A, incorporating standard security mechanisms:

Compared to the initial MCP specification, A2A appears to have a more mature approach to built-in security features. However, its focus on inter-agent communication implies many A2A endpoints might be publicly accessible, potentially increasing vulnerability impact. Heightened security awareness is crucial for A2A developers.

The Google Developers Blog explicitly states that A2A is designed to complement MCP. While A2A focuses on agent-to-agent communication, MCP provides helpful tools and context to agents. An AI agent might use MCP to interact with a database and then use A2A to collaborate with another AI agent to process that information or complete a complex task.

Structurally, A2A follows a client-server model with independent agents, whereas MCP operates within an application-LLM-tool structure centered on the LLM. A2A emphasizes direct communication between independent entities; MCP focuses on extending a single LLM's functionality via external tools.

Both protocols currently require manual configuration for agent registration and discovery. MCP benefits from earlier market entry and a more established community. However, A2A is rapidly gaining traction, backed by Google and a growing partner list. The prevailing sentiment suggests MCP and A2A are likely to evolve towards complementarity or integration, offering more open and standardized options for developers.

As AI agents become increasingly autonomous and interconnected through protocols like MCP and A2A, the security of the non-human identities (NHIs) they rely on becomes paramount. NHIs – encompassing service accounts, API keys, tokens, and certificates – act as the credentials allowing AI agents to access resources and interact with other systems. The sheer volume and variety of NHIs within modern enterprises already pose significant management and security challenges. The advent of widespread AI agent interactions will only amplify these challenges and introduce new risks.

The security threats emerging with MCP and A2A underscore the urgency of robust NHI security:

Given the dynamic and often decentralized nature of NHIs, traditional security approaches are often inadequate. Unlike human users, NHIs frequently lack clear ownership or lifecycle management. Therefore, a dedicated focus on Non-Human Identity Security becomes a fundamental requirement for organizations embracing AI agent interoperability.

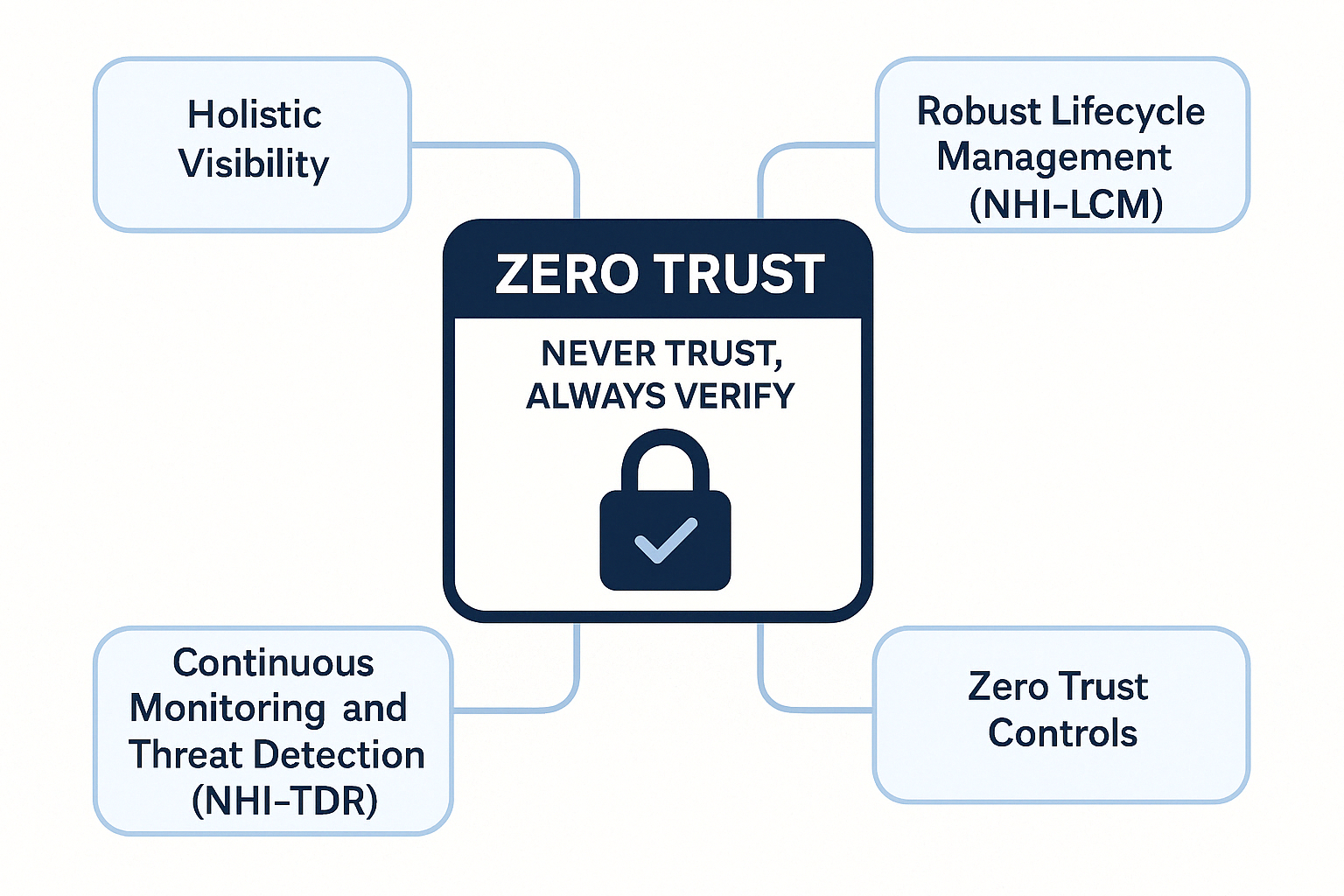

A Zero Trust security model ("never trust, always verify") becomes even more critical for NHIs in this landscape. Every access request from an AI agent utilizing an NHI should be continuously validated to minimize risk.

To strengthen your organization's NHI security posture, consider these strategies:

Companies like Cremit are specifically addressing these challenges by providing solutions focused on Non-Human Identity Security. Our NHI Security Platform helps organizations gain visibility into their non-human identities and manage their lifecycles effectively. Cremit's technology aims to detect and mitigate risks associated with NHIs, including those potentially exploited in MCP and A2A environments. For instance, Cremit is developing capabilities using platforms like AWS Bedrock (with Claude+MCP) to analyze NHI behavior and provide context-aware threat information. The platform aims to detect exposed secrets in development/collaboration tools and offer remediation guidance. Cremit's focus highlights the growing recognition of this critical need.

The emergence of protocols like MCP and A2A signifies a monumental leap forward in AI agent capabilities and interconnectedness. While promising transformative benefits, they also introduce new security challenges centered around non-human identities. Securing these often-overlooked digital credentials is no longer a secondary concern but a fundamental prerequisite for realizing the full potential of AI agent interoperability safely and reliably. By prioritizing and implementing robust Non-Human Identity Security strategies, organizations can confidently navigate this expanding AI universe, mitigate evolving risks, and build a more secure and intelligent future.

.png)

Need answers? We’ve got you covered.

Below are some of the most common questions people ask us. If you can’t find what you’re looking for, feel free to reach out!

We specialize in high-converting website design, UX/UI strategy, and fast-launch solutions for SaaS and startup founders.

Helping SaaS and startup founders succeed with conversion-focused design, UX strategy, and quick deployment.

Designing sleek, user-focused websites that help SaaS and startup teams launch faster and convert better.

We design and launch beautiful, conversion-optimized websites for ambitious SaaS and startup founders.